Our world produces a massive amount of data daily, so any tool we can get that can make data processing less painful is a welcome relief. Fortunately, plenty of data processing tools and services are available.

So today, we’re focusing on Amazon Kinesis. We will explore what Amazon Kinesis is and its uses, limits, benefits, and features. We will also look at Amazon Kinesis Data Streams, explain Kinesis Data Analytics, and compare Kinesis with other resources such as SQS, SNS, and Kafka.

So then, what is Amazon Kinesis anyway?

What is Amazon Kinesis?

Amazon Kinesis is a series of managed, cloud-based services dedicated to collecting and processing streaming data in real time. To quote the AWS Kinesis webpage, “Amazon Kinesis makes it easy to collect, process, and analyze real-time, streaming data so you can get timely insights and react quickly to new information. Amazon Kinesis offers key capabilities to cost-effectively process streaming data at any scale, along with the flexibility to choose the tools that best suit the requirements of your application. With Amazon Kinesis, you can ingest real-time data such as video, audio, application logs, website clickstreams, and IoT telemetry data for machine learning, analytics, and other applications. Amazon Kinesis enables you to process and analyze data as it arrives and respond instantly instead of having to wait until all your data is collected before the processing can begin.”

Amazon Kinesis consists of four specialized services or capabilities:

- Kinesis Data Streams (KDS): Amazon Kinesis Data Streams captures streaming data generated by various data sources in real time. Producer applications write to the Kinesis data stream, and consumer applications connected to the stream read the data for different types of processing.

- Kinesis Data Firehose (KDF): This service precludes the need to write applications or manage resources. Users configure data producers to send data to the Kinesis Data Firehose, which automatically delivers the data to the specified destinations. Users can even configure Kinesis Data Firehose to change the data before sending it.

- Kinesis Data Analytics (KDA): This service lets users process and analyze streaming data. It provides a scalable, efficient environment that runs applications built using the Apache Flink framework. In addition, the framework offers helpful operators like aggregate, filter, map, window, etc., for querying streaming data.

- Kinesis Video Streams (KVS): This is a fully managed service used to stream live media from audio or video capture devices to the AWS Cloud. It can also be used to build applications for real-time video processing and batch-oriented video analytics.

We will examine each service in more detail later.

What Are the Limits of Amazon Kinesis?

Although the above description sounds impressive, even Kinesis has limitations and restrictions. For instance:

- Stream records are accessible for 24 hours by default. Retention can be extended to up to seven days with extended data retention, and to up to 365 days with long-term data retention.

- You can create, by default, up to 50 data streams with the on-demand capacity mode within your Amazon Web Services account. If you need a quota increase, you must contact AWS support.

- The maximum size of the data payload before Base64-encoding (also called a data blob) in one record is one megabyte (MB).

- You can switch between on-demand and provisioned capacity modes for each data stream in your account twice within 24 hours.

- Each shard supports writes of up to 1,000 records per second and up to five read transactions per second.

How Do You Use Amazon Kinesis?

It’s easy to set up Amazon Kinesis. Follow these steps:

- Set up Kinesis:

  - Sign in to your AWS account, then select Amazon Kinesis in the AWS Management Console.

  - Click “Create stream” and fill in all the required fields. Then, click the “Create” button.

  - You’ll now see the stream in the stream list.

- Set up users: This phase involves using Create New Users and assigning policies to each one.

- Connect Kinesis to your application: Depending on your application (Looker, Tableau Server, Domo, ZoomData), you will probably need to refer to your Settings or Administrator windows and follow the prompts, typically under “Sources.”

What Are the Features of Amazon Kinesis?

Here are Amazon Kinesis’s most prominent features:

- It’s cost-effective: Kinesis uses a pay-as-you-go model for the resources you need and charges hourly for the throughput required.

- It’s easy to use: You can quickly create new streams, set requirements, and start streaming data.

- You can build Kinesis applications: Developers get client libraries that let them design and operate real-time data processing applications.

- It has security: You can secure your data at-rest by using server-side encryption and employing AWS KMS master keys on any sensitive data within the Kinesis Data Streams. You can access data privately through your Amazon Virtual Private Cloud (VPC).

- It has elastic, high throughput, and real-time processing: Kinesis allows users to collect and analyze information in real-time instead of waiting for a data-out report.

- It integrates with other Amazon services: For example, Kinesis integrates with Amazon DynamoDB, Amazon Redshift, and Amazon S3.

- It’s fully managed: Kinesis is fully managed, so it runs your streaming applications without you having to manage any infrastructure.

All About Kinesis Data Streams (KDS)

Amazon Kinesis Data Streams collects and stores streaming data in real time. Producer applications collect streaming data from various sources and push it continuously into a Kinesis data stream. Consumer applications can then read the data from the stream and process it in real time.

Data stored in a Kinesis data stream is retained for 24 hours by default, but retention can be configured for up to 365 days.
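As a rough sketch of how retention might be changed programmatically, the helper below checks a requested retention period against the 24-hour default and the 365-day maximum, then calls the matching Kinesis API operation. The function name `set_retention_hours` is hypothetical, and `client` is assumed to be a boto3 Kinesis client (`boto3.client("kinesis")`) or any object exposing the same two methods.

```python
MIN_RETENTION_HOURS = 24          # default retention
MAX_RETENTION_HOURS = 365 * 24    # 8,760 hours


def set_retention_hours(client, stream_name, current_hours, target_hours):
    """Move a stream's retention from current_hours to target_hours.

    `client` is assumed to be a boto3 Kinesis client; any object with the
    same two methods works, which keeps the helper easy to test.
    """
    if not MIN_RETENTION_HOURS <= target_hours <= MAX_RETENTION_HOURS:
        raise ValueError(
            f"retention must be between {MIN_RETENTION_HOURS} and "
            f"{MAX_RETENTION_HOURS} hours, got {target_hours}"
        )
    if target_hours > current_hours:
        client.increase_stream_retention_period(
            StreamName=stream_name, RetentionPeriodHours=target_hours
        )
        return "increased"
    if target_hours < current_hours:
        client.decrease_stream_retention_period(
            StreamName=stream_name, RetentionPeriodHours=target_hours
        )
        return "decreased"
    return "unchanged"
```

Separate operations exist for raising and lowering retention, which is why the helper needs to know the current value before deciding which one to call.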

As part of their processing, consumer applications can save their results using other AWS services like DynamoDB, Redshift, or S3. In addition, these applications process the data in real or near real-time, making the Kinesis Data Streams service especially valuable for creating time-sensitive applications such as anomaly detection or real-time dashboards.

KDS is also used for real-time data aggregation, followed by loading the aggregated data into a data warehouse or map-reduce cluster.

Defining Streams, Shards, and Records

A stream is made up of multiple data carriers called shards. The data stream’s total capacity is the sum of the capacities of all the shards that make it up. Each shard provides a set capacity value and features a data record sequence. Data stored in the shard is known as a record; each shard has a series of data records. Each data record gets a sequence number assigned by the Kinesis Data Stream.

Creating a Kinesis Data Stream

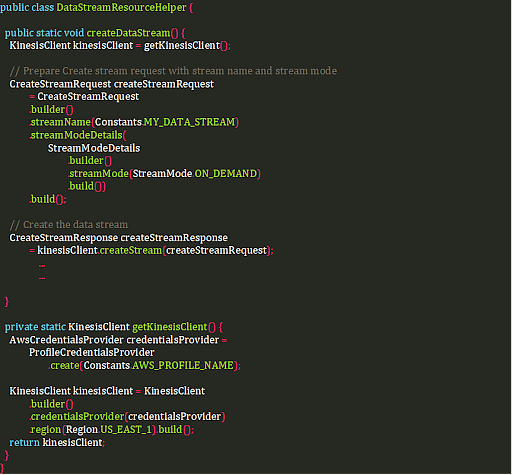

You can create a Kinesis data stream from the AWS Kinesis Data Streams Management Console, from the AWS Command Line Interface (CLI), or by calling the CreateStream operation of the Kinesis Data Streams API through the AWS SDK. You can also use AWS CloudFormation or AWS CDK to create a data stream as part of an infrastructure-as-code project.

For illustrative purposes, consider creating a data stream with the Kinesis Data Streams API in “on-demand” mode. In that mode, you name the stream and select the capacity mode, and you do not need to specify a shard count.
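A minimal sketch of such a call in Python, assuming the boto3 SDK, might look like the following. The helper names `build_create_stream_request` and `create_stream` are hypothetical; the `StreamName`, `ShardCount`, and `StreamModeDetails` parameters belong to the CreateStream operation. boto3 is imported lazily so that the request builder can be tried without AWS credentials.

```python
def build_create_stream_request(stream_name, on_demand=True, shard_count=None):
    """Build keyword arguments for the Kinesis CreateStream operation."""
    if on_demand:
        # On-demand streams scale automatically, so no shard count is given.
        return {
            "StreamName": stream_name,
            "StreamModeDetails": {"StreamMode": "ON_DEMAND"},
        }
    if not shard_count or shard_count < 1:
        raise ValueError("provisioned mode needs a positive shard_count")
    return {
        "StreamName": stream_name,
        "ShardCount": shard_count,
        "StreamModeDetails": {"StreamMode": "PROVISIONED"},
    }


def create_stream(stream_name, on_demand=True, shard_count=None, client=None):
    """Create the stream; a boto3 client is created lazily if none is passed."""
    if client is None:
        import boto3  # imported here so the request builder works without boto3
        client = boto3.client("kinesis")
    request = build_create_stream_request(stream_name, on_demand, shard_count)
    client.create_stream(**request)
    return request
```

Calling `create_stream("example-stream")` (a placeholder name) would request an on-demand stream, while passing `on_demand=False, shard_count=2` would request a provisioned stream with two shards.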

Kinesis Data Stream Records

These records have:

- A sequence number: A unique identifier assigned to each record by the KDS.

- A partition key: This key segregates and routes records to different shards in a stream.

- A data blob: The blob’s maximum size is 1 megabyte.
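To make the role of the partition key more concrete, here is a small sketch of how a key can be mapped to a shard. Kinesis hashes each partition key with MD5 into a 128-bit integer and assigns the record to the shard whose hash key range contains that value. The evenly split ranges below are an assumption for illustration (real streams can have uneven ranges after shard splits and merges), and all function names are hypothetical.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces 128-bit values


def hash_key(partition_key):
    """Map a partition key to a 128-bit integer via MD5."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def even_shard_ranges(shard_count):
    """Split the hash space into contiguous, equally sized ranges.

    This even split is an assumption made for the example.
    """
    size = HASH_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * size
        end = HASH_SPACE - 1 if i == shard_count - 1 else start + size - 1
        ranges.append((start, end))
    return ranges


def shard_for_key(partition_key, ranges):
    """Return the index of the shard whose range contains the key's hash."""
    value = hash_key(partition_key)
    for index, (start, end) in enumerate(ranges):
        if start <= value <= end:
            return index
    raise ValueError("ranges do not cover the hash space")
```

Because the mapping is deterministic, every record with the same partition key lands on the same shard, which is what preserves per-key ordering. A key that is far more frequent than the others can therefore overload a single shard.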

Data Ingestion: Writing Data to Kinesis Data Streams

Applications that write data to the KDS streams are known as “producers.” Users can custom-build producer applications in a supported programming language using AWS SDK or the Kinesis Producer Library (KPL). Users can also use Kinesis Agent, a stand-alone application that runs as an agent on Linux-based server environments like database servers, log servers, and web servers.
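As an illustration of a custom-built producer, the sketch below batches events for the PutRecords operation, which accepts at most 500 records per request. The helper names and the `key_field` parameter are hypothetical, and `client` is assumed to be a boto3 Kinesis client. The sketch does not enforce the per-request size limit, so it only suits small events.

```python
import json

MAX_RECORDS_PER_CALL = 500  # PutRecords accepts at most 500 records per request


def to_entry(event, key_field):
    """Turn a dict event into a PutRecords entry keyed by one of its fields."""
    return {
        "Data": json.dumps(event).encode("utf-8"),
        "PartitionKey": str(event[key_field]),
    }


def batches(entries, size=MAX_RECORDS_PER_CALL):
    """Yield successive slices of at most `size` entries."""
    for start in range(0, len(entries), size):
        yield entries[start:start + size]


def send_events(client, stream_name, events, key_field):
    """Send events in batches and return the entries that were rejected.

    PutRecords is not all-or-nothing: each result carries an ErrorCode when
    that record failed, so failed entries are collected for a later retry.
    """
    failed = []
    entries = [to_entry(event, key_field) for event in events]
    for batch in batches(entries):
        response = client.put_records(StreamName=stream_name, Records=batch)
        for entry, result in zip(batch, response["Records"]):
            if "ErrorCode" in result:
                failed.append(entry)
    return failed
```

Returning the failed entries rather than raising leaves the retry policy (for example, backoff after throttling) to the caller. The KPL handles batching and retries on its own, so a helper like this is only relevant when using the SDK directly.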

Data Consumption: Reading Data from Kinesis Data Streams

This stage involves creating a consumer application that processes data from a data stream.

Consumers of Kinesis Data Streams

The Kinesis Data Streams API is a low-level method of reading streaming data. Users must poll the stream, checkpoint processed records, run multiple instances, and do other tasks using the Kinesis Data Streams API to conduct operations on a data stream. As a result, it’s practical to create a consumer application to read stream data. Here’s a sample of possible applications:

- AWS Lambda

- Kinesis Client Library (KCL)

- Kinesis Data Firehose

- Kinesis Data Analytics
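To show what the low-level polling described above involves, here is a minimal sketch of reading a single shard with the GetShardIterator and GetRecords operations. The function name `read_shard` and its `handle`, `max_polls`, and `idle_sleep` parameters are hypothetical, and `client` is assumed to be a boto3 Kinesis client. Checkpointing and multi-shard coordination are deliberately left out. Those are the tasks that the KCL or AWS Lambda would take over.

```python
import time


def read_shard(client, stream_name, shard_id, handle, max_polls=10, idle_sleep=1.0):
    """Poll one shard from its oldest record and pass each record to `handle`.

    Returns the number of records processed. `max_polls` bounds the loop so
    the sketch terminates; a real consumer would run until shut down.
    """
    iterator = client.get_shard_iterator(
        StreamName=stream_name,
        ShardId=shard_id,
        ShardIteratorType="TRIM_HORIZON",  # start at the oldest available record
    )["ShardIterator"]

    processed = 0
    for _ in range(max_polls):
        if iterator is None:  # shard was closed and has been fully read
            break
        response = client.get_records(ShardIterator=iterator, Limit=100)
        for record in response["Records"]:
            handle(record)
            processed += 1
        iterator = response.get("NextShardIterator")
        if not response["Records"]:
            time.sleep(idle_sleep)  # back off to respect the per-shard read limit
    return processed
```

Even this short loop has to track iterators and pace its own reads, which is why a higher-level consumer is usually the more practical choice.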

Throughput Limits: Shared vs. Enhanced Fan-Out Consumers

To design and operate a reliable data streaming system with predictable performance, users must keep the throughput limits of Kinesis Data Streams in mind.

Remember, the data capacity of a data stream is a function of the number of shards in the stream, and a shard supports 1 MB per second and 1,000 records per second for write throughput and 2 MB per second for read throughput. When multiple consumers read from a shard, this read throughput is shared amongst them. These consumers are known as “Shared fan-out consumers.” However, if users want dedicated throughput for their consumers, they can define the latter as “Enhanced fan-out consumers.”
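The arithmetic behind these limits can be sketched as a small calculator. It uses the per-shard figures quoted above (1 MB per second and 1,000 records per second for writes, 2 MB per second for reads). The function names are hypothetical, and the enhanced fan-out branch assumes each registered consumer receives its own 2 MB per second per shard, a figure not stated above.

```python
import math

WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2.0


def shards_needed(write_mb_per_s, records_per_s, read_mb_per_s):
    """Smallest shard count that satisfies all three per-shard limits."""
    return max(
        1,
        math.ceil(write_mb_per_s / WRITE_MB_PER_SHARD),
        math.ceil(records_per_s / WRITE_RECORDS_PER_SHARD),
        math.ceil(read_mb_per_s / READ_MB_PER_SHARD),
    )


def read_mb_per_consumer(consumer_count, enhanced_fan_out=False):
    """Per-shard read throughput available to each consumer."""
    if consumer_count < 1:
        raise ValueError("consumer_count must be at least 1")
    if enhanced_fan_out:
        # Assumption: each enhanced fan-out consumer gets a dedicated 2 MB/s.
        return READ_MB_PER_SHARD
    # Shared fan-out: all consumers split the shard's read throughput.
    return READ_MB_PER_SHARD / consumer_count
```

For example, a workload writing 3.5 MB and 2,500 records per second needs four shards, because the byte rate is the binding limit. With four shared fan-out consumers, each one can count on only 0.5 MB per second per shard.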

The Kinesis Data Firehose

The Kinesis Data Firehose is a fully managed service and the easiest method of loading streaming data into data stores and analytics tools. The firehose captures, transforms, and loads streaming data and allows near real-time analytics when using existing business intelligence (BI) tools and dashboards.

Creating a Kinesis Firehose Delivery Stream

You can create a Firehose delivery stream using the AWS SDK, the AWS Management Console, or infrastructure-as-code tools such as AWS CloudFormation and AWS CDK.

Transmitting Data to a Kinesis Firehose Delivery Stream

You can send data to a firehose from several different sources:

- Kinesis Data Stream

- Kinesis Agent

- Kinesis Data Firehose API

- Amazon CloudWatch Logs

- CloudWatch Events

- AWS IoT as the data source
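Of these sources, the Kinesis Data Firehose API is the one you call directly from your own code. A minimal sketch, assuming a boto3 Firehose client (`boto3.client("firehose")`) and hypothetical helper names, could look like this:

```python
import json


def to_firehose_record(event):
    """Serialize an event as one newline-terminated JSON document.

    The trailing newline is a common convention (an assumption here) so that
    records concatenated at the destination can be split apart again.
    """
    return {"Data": (json.dumps(event) + "\n").encode("utf-8")}


def send_to_firehose(client, delivery_stream_name, event):
    """Send a single event and return the record ID assigned by Firehose."""
    response = client.put_record(
        DeliveryStreamName=delivery_stream_name,
        Record=to_firehose_record(event),
    )
    return response["RecordId"]
```

Unlike a Kinesis data stream record, a Firehose record carries no partition key; the delivery stream decides how to buffer and deliver the data.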

Explaining Data Transformation in a Firehose Delivery Stream

Users can configure a Kinesis Data Firehose delivery stream to transform and convert streaming data from the data source before delivering it to its destination. Two methods are available:

- Transforming Incoming Data

- Converting the Incoming Data Records Format.
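Transforming incoming data is done by having the delivery stream invoke an AWS Lambda function on each batch of records. The sketch below follows the record contract for such functions: each incoming record has a `recordId` and Base64-encoded `data`, and each returned record reports a `result` of `Ok`, `Dropped`, or `ProcessingFailed`. The transformation itself (uppercasing a `message` field) is purely a hypothetical example.

```python
import base64
import json


def lambda_handler(event, context):
    """Uppercase the `message` field of each JSON record; drop records without it."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
        except ValueError:
            # Not valid JSON: hand the record back unchanged and flag the failure.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
            continue

        if not isinstance(payload, dict) or "message" not in payload:
            # Nothing to transform: tell Firehose to skip this record.
            output.append({
                "recordId": record["recordId"],
                "result": "Dropped",
                "data": record["data"],
            })
            continue

        payload["message"] = str(payload["message"]).upper()
        encoded = base64.b64encode((json.dumps(payload) + "\n").encode("utf-8"))
        output.append({
            "recordId": record["recordId"],
            "result": "Ok",
            "data": encoded.decode("ascii"),
        })
    return {"records": output}
```

Every incoming `recordId` appears exactly once in the response, since the delivery stream uses it to match transformed records to the originals.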

Firehose Delivery Stream Data Delivery Format

After the delivery stream receives the streaming data, it is automatically delivered to the configured destination. Each destination type that the Kinesis Data Firehose supports has its specific configuration for data delivery.

Explaining Kinesis Data Analytics

Kinesis Data Analytics is the simplest way to analyze and process real-time streaming data. It feeds real-time dashboards, generates time-series analytics, and creates real-time notifications and alerts.

What’s The Difference Between Amazon Kinesis Data Streams and Kinesis Data Analytics?

We use Kinesis Data Streams to write consumer applications with custom code designed to perform any needed streaming data processing. However, those applications typically run on server instances like EC2 in an infrastructure we provision and manage. On the other hand, Kinesis Data Analytics provides an automatically provisioned environment designed to run applications that are built with the Flink framework, which automatically scales to handle any volume of incoming data.

Also, Kinesis Data Streams consumer applications usually write records to a destination such as an S3 bucket or a DynamoDB table once some processing is done. Kinesis Data Analytics, however, features applications that perform queries like aggregations and filtering by applying different windows on the streaming data. This process identifies trends and patterns for real-time alerts and dashboard feeds.
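To make the idea of applying windows to streaming data more tangible, here is a tumbling-window count written in plain Python. This is not Flink code and not the Kinesis Data Analytics API; it only illustrates the aggregation and filtering logic that such an application would express with Flink operators. The function names and the `(timestamp, key)` event shape are assumptions.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window start, key).

    `events` is an iterable of (timestamp_seconds, key) pairs. Each event falls
    into exactly one fixed-size, non-overlapping window.
    """
    counts = Counter()
    for timestamp, key in events:
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


def alerts(counts, threshold):
    """Filter step: keep only the windows whose count reaches the threshold."""
    return sorted(k for k, n in counts.items() if n >= threshold)
```

With 60-second windows, events at seconds 0, 5, and 59 fall into the window starting at 0, while an event at second 60 opens the next window. An alerting rule then becomes a simple filter over the per-window counts.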

Flink Application Structure

Flink applications consist of:

- Execution Environment: This environment is defined in the main application class, and it's responsible for creating the data pipeline, which contains the business logic and consists of one or more operators chained together.

- Data Source: The application consumes data by referring to a source, and a source connector reads data from a Kinesis data stream, an Amazon S3 bucket, or another such resource.

- Processing Operators: The application processes data by employing processing operators, transforming the input data that originates from the data sources. Once the transformation is complete, the application forwards the changed data to the data sinks.

- Data Sink: The application writes data to external destinations by employing sinks. Sink connectors write data to a Kinesis Data Firehose delivery stream, a Kinesis data stream, an Amazon S3 bucket, or another such destination.
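The four parts listed above can be mirrored in a few lines of plain Python. The sketch below is an analogy rather than Flink code: generators stand in for the source and the chained operators, a list stands in for the sink, and all names and the `sensor,value` record format are hypothetical.

```python
def source(lines):
    """Data source: yields raw records (here, from an in-memory list)."""
    for line in lines:
        yield line


def parse(records):
    """Map operator: turn 'sensor,value' strings into (sensor, float) pairs."""
    for record in records:
        sensor, value = record.split(",")
        yield sensor.strip(), float(value)


def above(readings, limit):
    """Filter operator: keep readings strictly above `limit`."""
    for sensor, value in readings:
        if value > limit:
            yield sensor, value


def sink(readings, destination):
    """Data sink: append results to `destination` and return it."""
    for reading in readings:
        destination.append(reading)
    return destination


def run_pipeline(lines, limit):
    """Stand-in for the execution environment: wires source, operators, and sink."""
    return sink(above(parse(source(lines)), limit), [])
```

As in a Flink job, nothing flows until the pipeline is wired end to end and executed. In a real application, the source and sink would be connectors to services such as Kinesis Data Streams or Amazon S3 instead of in-memory lists.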

Creating a Flink Application

Users create Flink applications and run them using the Kinesis Data Analytics service. Users can create these applications in Java, Python, or Scala.

Configuring a Kinesis Data Stream as Both a Source and a Sink

Once the data pipeline is tested, users can modify the data source in the code, so it connects to a Kinesis Data Stream, which ingests the streaming data that must be processed.

Deploying a Flink Application to Kinesis Data Analytics

Kinesis Data Analytics creates jobs to run Flink applications. The jobs look for a compiled source in an S3 bucket. Therefore, once the application is compiled and packaged, users must create an application in Kinesis Data Analytics configuring the following components:

- Input: Users map the streaming source to an in-application data stream, and the data flows from a number of data sources and into the in-application data stream.

- Application code: This element consists of the location of an S3 bucket that has the compiled Flink application. The application reads from an in-application data stream associated with a streaming source, then writes to an in-application data stream associated with output.

- Output: This component consists of one or more in-application streams that store intermediate results. Users can then optionally configure the application's output to persist data from specific in-application streams and send it to an external destination.

Using Job Creation to Run the Kinesis Data Analytics Application

Users can run an application by choosing “Run” on the application’s page found in the AWS console. When the users run a Kinesis Data Analytics application, the Kinesis Data Analytics service creates an Apache Flink job. The Job Manager manages the job’s execution and the resources it uses. It also divides the implementation of the application into tasks, and a task manager, in turn, oversees each task. Users monitor application performance by examining the performance of each task manager or job manager.

Creating Flink Applications Interactively with Notebooks

Users can employ notebooks, a tool typically found in data science tasks, to author Flink applications. A notebook is a web-based interactive development environment used by data scientists to write and execute code and visualize results. Studio notebooks can be created in the AWS Management Console.

All About Kinesis Video Streams (KVS)

Kinesis Video Streams is a fully managed service used to:

- Connect and stream audio, video, and other time-encoded data from different capturing devices. This process uses an infrastructure provisioned dynamically within the AWS Cloud.

- Build applications that function on live data streams using the ingested data frame-by-frame and in real-time for low-latency processing.

- Securely and durably store any media data for a default retention period of one day and a maximum of 10 years.

- Create ad hoc or batch applications that function on durably persisted data without strict latency requirements.

The Key Concepts: Producer, Consumer, and Kinesis Video Stream

The service is built around the idea of a producer sending the streaming data to a stream, then a consumer application reading the transmitted data from the stream. The chief concepts are:

- Producer: This can be any video-generating device, like a security camera, a body-worn camera, a smartphone camera, or a dashboard camera. Producers can also send non-video data, such as images, audio feeds, or RADAR data. Basically, this is any source that puts data into the video stream.

- Kinesis Video Stream: This resource transports live video data, optionally stores it, then makes the information available for consumption in real-time and on an ad hoc or batch basis.

- Consumer: The consumer is an application that reads fragments and frames from the KVS for viewing, processing, or analysis.

Creating a Kinesis Video Stream

Users can create a Kinesis Video Stream with the AWS Management Console.
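A video stream can also be created programmatically. The following is a minimal sketch, assuming a boto3 Kinesis Video Streams client (`boto3.client("kinesisvideo")`); the helper names, the default media type, and the stream name are hypothetical. The retention check reflects the ten-year maximum mentioned earlier.

```python
MAX_VIDEO_RETENTION_HOURS = 10 * 365 * 24  # ten years, expressed in hours


def build_video_stream_request(stream_name, retention_hours=24,
                               media_type="video/h264"):
    """Build keyword arguments for the Kinesis Video Streams CreateStream call."""
    if not 0 <= retention_hours <= MAX_VIDEO_RETENTION_HOURS:
        raise ValueError(
            f"retention_hours must be between 0 and {MAX_VIDEO_RETENTION_HOURS}"
        )
    return {
        "StreamName": stream_name,
        "DataRetentionInHours": retention_hours,
        "MediaType": media_type,
    }


def create_video_stream(client, stream_name, retention_hours=24,
                        media_type="video/h264"):
    """Create the stream and return its ARN."""
    request = build_video_stream_request(stream_name, retention_hours, media_type)
    response = client.create_stream(**request)
    return response["StreamARN"]
```

Calling `create_video_stream(client, "example-camera")` would request a stream that keeps media for one day.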

Sending Media Data to a Kinesis Video Stream

Next, users must configure a producer to place the data into the Kinesis Video Stream. The producer employs a Kinesis Video Streams Producer SDK that pulls the video data (in the form of frames) from media sources and uploads it to the KVS. This action takes care of every underlying task required to package the fragments and frames created by the device's media pipeline. The SDK can also handle token rotation for secure and uninterrupted streaming, stream creation, processing acknowledgments returned by Kinesis Video Streams, and other jobs.

Consuming Media Data from a Kinesis Video Stream

Finally, users consume the media data by viewing it in an AWS Kinesis Video Stream console or by building an application that can read the media data from a Kinesis Video Stream. The Kinesis Video Stream Parser Library is a toolset used in Java applications to consume the MKV data from a Kinesis Video Stream.

Apache Kafka vs AWS Kinesis

Both Kafka and Kinesis are designed to ingest and process multiple large-scale streams of data from disparate and flexible sources. Both platforms replace traditional message brokers by ingesting large data streams that must be processed and delivered to different services and applications.

The most significant difference between Kinesis and Kafka is that the former is a managed service that needs only minimal setup and configuration. Kafka, on the other hand, is an open-source solution that requires a lot of investment and knowledge to configure, typically requiring setup times that go on for weeks, not hours.

Amazon Kinesis uses critical concepts such as Data Producers, Data Consumers, Data Records, Data Streams, Shards, Partition Key, and Sequence Numbers. As we’ve already seen, it consists of four specialized services.

Kafka, however, uses Brokers, Consumers, Records, Producers, Logs, Partitions, Topics, and Clusters. Additionally, Kafka consists of five core APIs:

- The Producer API allows applications to send data streams to topics in the Kafka cluster.

- The Consumer API lets applications read data streams from topics in the Kafka cluster.

- The Streams API transforms data streams from input topics to output topics.

- The Connect API allows implementing connectors to continually pull from some source system or application into Kafka or to push from Kafka into some sink system or application.

- The AdminClient API permits managing and inspecting brokers, topics, and other Kafka-related objects.

Also, Kafka offers SDK support for Java, while Kinesis supports Android, Java, Go, and .Net, as well as others. Kafka is more flexible, allowing users greater control over configuration details. However, Kinesis’s rigidity is a feature because that inflexibility means standardized configuration, which in turn is responsible for the rapid setup time.

SQS vs. SNS vs. Kinesis

Here’s a quick comparison between SQS (Amazon Simple Queue Service), SNS (Amazon Simple Notification Service), and Kinesis:

| SQS | SNS | Kinesis |
| --- | --- | --- |
| Consumers pull the data. | Pushes data to many subscribers. | Consumers pull data. |
| Data gets deleted after it’s consumed. | You can have up to 12,500,000 subscribers per topic. | You can have as many consumers as you need. |
| You can have as many workers (or consumers) as you need. | Data is not persisted, meaning it’s lost if not delivered. | It’s possible to replay data. |
| There’s no need to provision throughput. | It has up to 100,000 topics per account by default. | It’s meant for real-time big data, analytics, and ETL. |
| No ordering guarantee, except regarding FIFO queues. | There’s no need to provision throughput. | The data expires after a certain number of days. |
| Individual message delay. | It integrates with SQS for a fan-out architecture pattern. | You must provision throughput (in provisioned capacity mode). |

Do You Want to Become a Data Engineer?

If the prospect of working with data in ways like what we've shown above interests you, you might enjoy a career in data engineering! Simplilearn offers a Post Graduate Program in Data Engineering, held in partnership with Purdue University and in collaboration with IBM, that will help you master all the crucial data engineering skills.

This bootcamp is perfect for professionals, covering essential topics like the Hadoop framework, Data Processing using Spark, Data Pipelines with Kafka, Big Data on AWS, and Azure cloud infrastructures. Simplilearn delivers this program via live sessions, industry projects, masterclasses, IBM hackathons, and Ask Me Anything sessions.

Glassdoor reports that a Data Engineer in the United States may earn a yearly average of $117,476.

So, visit Simplilearn today and start a challenging yet rewarding new career!