In 2015, PayPal agreed to pay $7.7 million to the US government after inadequate screening mechanisms allowed nearly 500 transactions, worth about $44,000, to go through in violation of sanctions against Iran, Cuba, and Sudan.

In 2018, an employee at Samsung Securities mistakenly issued about 2.8 billion shares, worth roughly $105 billion, to 2,018 company employees instead of paying dividends totaling 2.8 billion won (South Korean currency).

Bad data costs companies billions of dollars every year. That’s why you need a data validation tool to ensure your data is accurate, consistent, and reliable.

What are Data Validation Tools?

Data validation tools automatically check data for accuracy, completeness, and conformity to predefined standards. An organization can define rules or conditions, such as including only data from the last five years in an analysis; any value that doesn’t meet the specified criteria is excluded. The tools apply a range of functions to examine the data and to flag and rectify discrepancies.
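
To make the five-year rule concrete, here is a minimal sketch in plain Python. It is not taken from any of the tools reviewed below; the field name `order_date` and the helper names are assumptions chosen for illustration.

```python
from datetime import date

def within_last_years(record_date: date, today: date, years: int = 5) -> bool:
    """Return True if record_date falls within the last `years` years of `today`."""
    try:
        cutoff = today.replace(year=today.year - years)
    except ValueError:
        # `today` is Feb 29 and the cutoff year is not a leap year.
        cutoff = today.replace(year=today.year - years, day=28)
    return cutoff <= record_date <= today

def apply_rule(records, today):
    """Split records into those that pass the rule and those that are excluded."""
    accepted, excluded = [], []
    for rec in records:
        target = accepted if within_last_years(rec["order_date"], today) else excluded
        target.append(rec)
    return accepted, excluded

# Hypothetical sample data.
records = [
    {"id": 1, "order_date": date(2023, 3, 14)},
    {"id": 2, "order_date": date(2016, 7, 1)},
]
accepted, excluded = apply_rule(records, today=date(2024, 1, 1))
```

Keeping the excluded records in a separate list, rather than silently dropping them, makes it possible to review why a value failed the rule.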

Let’s review the top 7 data validation tools to help you choose the solution that best suits your business needs.

Top 7 Data Validation Tools

- Astera

- Informatica

- Talend

- Datameer

- Alteryx

- Data Ladder

- Ataccama One

1. Astera

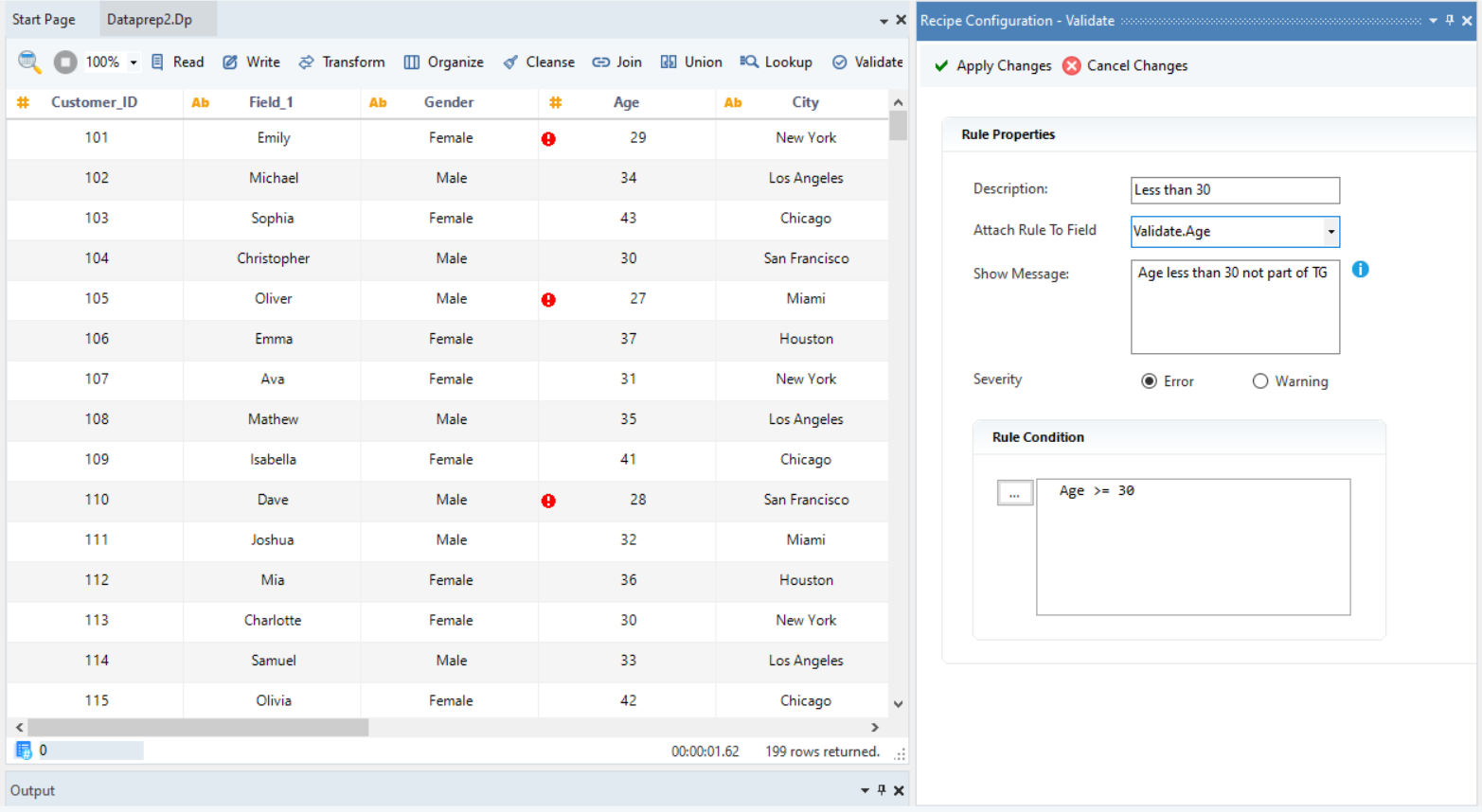

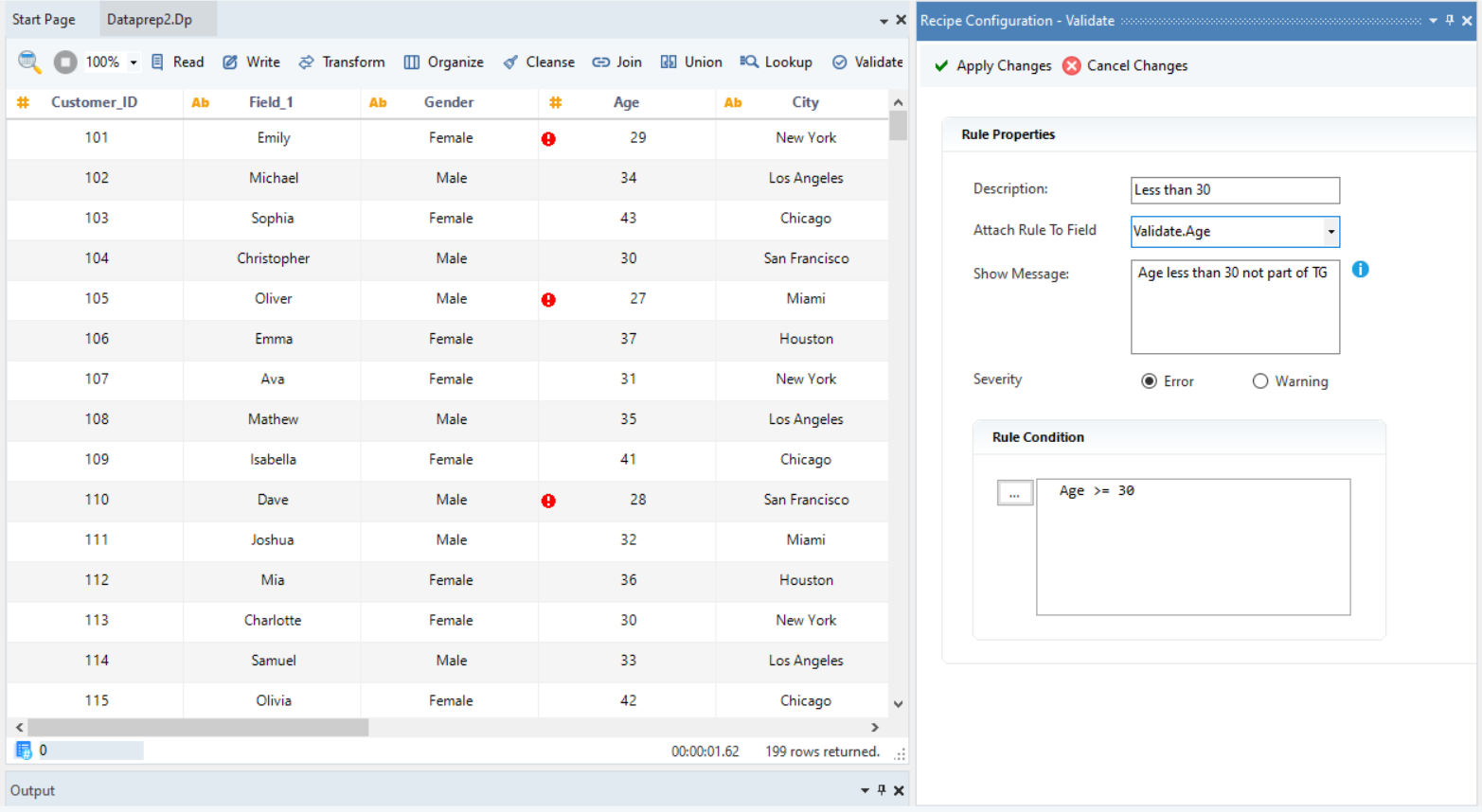

Astera is an enterprise-grade, unified data management solution with advanced data validation features. By offering agile data cleansing and correction capabilities, the tool empowers you to access trusted, accurate, and consistent data for reliable insights.

The platform also allows you to implement rigorous data validation checks and customize rules based on your specific requirements. Furthermore, its real-time data health checks give instant feedback on data quality, enabling you to keep track of changes.

For efficient data validation, Astera has a rich set of transformations that allow you to:

- Find and replace null/missing values.

- Convert the data formats and values into a common format.

- Remove duplicates from a column or the entire dataset.

- Apply custom validation rules so that only relevant data is used for analysis.

Entries flagged as erroneous in the data validation process
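
The four operations listed above can be sketched in a few lines of generic Python. This illustrates the techniques only, not how Astera implements them; the column names, the accepted date formats, and the `is_valid` rule are all assumptions.

```python
from datetime import datetime

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats

def fill_missing(row, defaults):
    """Replace None or empty-string values with a per-column default, if one exists."""
    return {k: (defaults.get(k, v) if v in (None, "") else v) for k, v in row.items()}

def standardize_date(value):
    """Convert a date string in any assumed format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None  # unparseable; left for a validation rule to flag

def drop_duplicates(rows, key):
    """Keep the first row seen for each value of `key`."""
    seen, unique = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique

def is_valid(row):
    """Hypothetical custom rule: amount must be positive and the date parseable."""
    return row["amount"] > 0 and row["date"] is not None

raw = [
    {"id": 1, "date": "2024-01-05", "amount": 120.0, "country": "US"},
    {"id": 1, "date": "2024-01-05", "amount": 120.0, "country": "US"},  # duplicate
    {"id": 2, "date": "05/02/2024", "amount": 75.5, "country": ""},     # missing country
    {"id": 3, "date": "not a date", "amount": -10.0, "country": "DE"},  # fails the rule
]

cleaned = []
for row in drop_duplicates(raw, key="id"):
    row = fill_missing(row, defaults={"country": "UNKNOWN"})
    row["date"] = standardize_date(row["date"])
    cleaned.append(row)

valid = [r for r in cleaned if is_valid(r)]
flagged = [r for r in cleaned if not is_valid(r)]
```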

Other Key Features:

- Drag-and-drop Interface: The visual and interactive interface empowers you to simply drag the objects, manipulate the data, and write it to the destination of your choice without writing a single line of code.

- Connectivity to a Wide Range of Sources: Astera allows you to seamlessly connect to on-premises systems as well as cloud-based sources. You can also build API-based connectors for any integration or import connectors from Astera’s library of custom-built connectors.

- Built-in Transformations: Astera provides a comprehensive library of pre-built transformations, such as join, reconcile, aggregate, and normalize, allowing you to perform complex data operations with just a few clicks.

- Workflow Automation: The tool includes job scheduling and automation capabilities based on time-based and event-based triggers, eliminating manual intervention.

- Parallel Processing: Its industrial-strength ETL engine splits large data sets into smaller subsets that are processed in parallel to ensure high-speed integration, regardless of the data size and format.

- AI Integration: Astera incorporates AI into operations such as data extraction, mapping, and modeling, simplifying the data management process.
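
The parallel processing idea described above, splitting a large data set into smaller subsets and processing them concurrently, can be illustrated with a generic sketch. This is not Astera’s engine. The function names and the validation rule are assumptions, and threads are used only to keep the example short; CPU-bound work in Python would more typically use processes.

```python
from concurrent.futures import ThreadPoolExecutor

def chunked(rows, size):
    """Yield consecutive slices of `rows`, each with at most `size` items."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]

def count_invalid(chunk):
    """Count rows in a chunk with a missing or negative amount (assumed rule)."""
    return sum(1 for row in chunk if row["amount"] is None or row["amount"] < 0)

def count_invalid_parallel(rows, chunk_size=1000, workers=4):
    """Validate each chunk in a worker thread and add up the per-chunk counts."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_invalid, chunked(rows, chunk_size)))

# Hypothetical sample data, split into chunks of three rows.
rows = [{"amount": a} for a in (10, None, -5, 20, 30, -1, 0)]
total_invalid = count_invalid_parallel(rows, chunk_size=3)
```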

2. Informatica

Informatica is a data management platform that allows users to perform critical data quality tasks, such as deduplication, standardization, enrichment, and validation. Users can identify, rectify, and track data quality issues both in the cloud and on-premises.

Key Features:

- Connectivity to Diverse Sources: The platform has built-in connectors, allowing users to connect with various source systems such as databases, file systems, or SaaS-based applications.

- Data Preparation: Informatica allows users to profile, standardize, and validate the data by using pre-built rules and accelerators.

- Data Monitoring: The solution provides users with visibility into the data set to detect and identify any discrepancies.

- Parallel Processing: Informatica enables users to run multiple jobs simultaneously by splitting up tasks, which improves performance.

3. Talend

Talend is a data quality and integration solution that gives users access to accurate data. Its data quality solution profiles, cleanses, and standardizes data across systems. Driven by machine-learning algorithms, it offers recommendations for resolving data quality issues. Moreover, the built-in Trust Score evaluates the overall health of the data to identify discrepancies and irregularities within the dataset.

Key Features:

- Self-Service Data Integration: Talend’s self-service data integration platform allows users to build and deploy data integration jobs without writing a single line of code.

- Data Transformation: Talend offers a wide range of data transformation capabilities, including filtering, sorting, aggregating, and joining data.

- Data Preparation: Talend allows users to prepare the data, apply quality checks, such as uniqueness and format validation, and monitor the data’s health via Talend Trust Score.

- Data Security and Compliance: Talend allows users to protect sensitive information by providing role-based access and ensures compliance with regulations like GDPR and HIPAA.

4. Datameer

Datameer is a data preparation and transformation solution that converts raw data into a usable format for analysis. The platform is built for Snowflake, a cloud-based data platform, and manages all aspects of the data lifecycle, from exploration to preparation to sharing trusted datasets. Its spreadsheet-style interface allows users to navigate and interact with complex data in an intuitive manner.

Key Features:

- Data Preparation: Datameer’s self-service data preparation interface is spreadsheet-like, making it easy for users to explore, transform, and visualize data.

- Native Integration with Snowflake: Datameer enables data engineers and analysts to transform data directly in Snowflake using either SQL or a code-free interface.

- Data Encoding: Datameer’s encoding feature automatically converts categorical data into a binary format for use in machine learning models.

- Data Catalog: Datameer’s catalog feature provides a centralized view of all data assets within an organization, with intelligent search capabilities.
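
As an illustration of the data encoding feature mentioned above, one common way to turn categorical values into a binary format is one-hot encoding. The sketch below is generic Python and makes no claim about how Datameer implements its encoding; the function name is hypothetical.

```python
def one_hot_encode(values):
    """Map each categorical value to a list of 0/1 flags, one flag per category."""
    categories = sorted(set(values))
    encoded = [[1 if value == category else 0 for category in categories]
               for value in values]
    return categories, encoded

categories, encoded = one_hot_encode(["red", "green", "red", "blue"])
# categories is ["blue", "green", "red"], so "red" becomes [0, 0, 1].
```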

5. Alteryx

Alteryx is a data preparation and analytics platform that enables access to timely insights. It works as a workflow designer and connects to various on-premises and cloud sources, such as flat files, databases, and APIs, allowing users to access and transform data in a single platform. The platform leverages AI to recommend data quality improvements, which helps in validating, transforming, and filtering the data according to requirements.

Key Features:

- Data Profiling: Alteryx Designer offers data profiling capabilities that allow users to understand the characteristics of data and identify potential problems.

- Data Quality: Alteryx enables users to uncover data quality issues and validate data with the help of its AI-powered recommendations.

- Data Governance: The platform allows users to track data lineage, manage audit logs, and enforce role-based access control.

- Performance Monitoring: Alteryx Designer offers performance monitoring features that can be used to track the performance of data preparation and analytics workflows.

6. Data Ladder

Data Ladder is a data quality solution with built-in data profiling, cleansing, and deduplication capabilities. The software verifies the data before storing it in a database, offering real-time data quality validation. The platform has an intuitive visual interface and integrates with custom-built or third-party applications.

Key Features:

- User-friendly Interface: Data Ladder offers a visual and interactive interface, enabling technical and business users to process data in a code-free environment.

- Data Preparation: The platform allows users to discover, cleanse, validate, and match data according to the business’s specific data quality requirements.

- Data Import: Data Ladder allows users to integrate data from multiple disparate sources, including file formats, relational databases, cloud storage, and APIs.

- Data Matching: The platform enables users to employ proprietary and industry-grade match algorithms, allowing them to define custom criteria and match confidence levels for exact, fuzzy, numeric, or phonetic matching.
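
To show what exact and fuzzy matching with a confidence level can look like, here is a small sketch built on Python’s standard `difflib` module. Data Ladder’s match algorithms are proprietary, so this is a generic stand-in; the `0.85` threshold and the function names are assumptions.

```python
from difflib import SequenceMatcher

def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity score, ignoring case and surrounding whitespace."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()

def classify_match(a: str, b: str, threshold: float = 0.85) -> str:
    """Label a pair as an exact match, a fuzzy match, or no match."""
    if a == b:
        return "exact"
    if similarity(a, b) >= threshold:
        return "fuzzy"
    return "no match"

# Hypothetical customer names from two source systems.
pairs = [
    ("Acme Corp", "Acme Corp"),
    ("Jon Smith", "John Smith"),
    ("Acme Corp", "Globex Inc"),
]
labels = [classify_match(a, b) for a, b in pairs]
```

Raising the threshold reduces false matches at the cost of missing some true duplicates, which is why tools in this category usually make it configurable.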

7. Ataccama One

Ataccama One is a data management solution that offers data quality and validation features to improve the accuracy and reliability of the data. It ensures continuous data quality management by leveraging AI to automatically detect anomalies and irregularities and make changes as needed. Moreover, users can set custom rules to validate their data by using sentence-like conditions or the rich expressions the solution provides in an interactive interface.

Key Features:

- Data Quality: Ataccama One helps users improve the accuracy, completeness, and consistency of their data by offering data profiling, cleansing, enrichment, and validation capabilities.

- Data Catalog: Ataccama One enables users to discover, understand, and manage their data assets, including features for data search, lineage, and documentation.

- Data Lineage: Ataccama One allows users to track the flow of data through their systems to identify data quality issues and improve the accuracy of their data.

- AI Integration: Ataccama One uses AI and machine learning to automate data management tasks and to improve the accuracy of data quality checks.

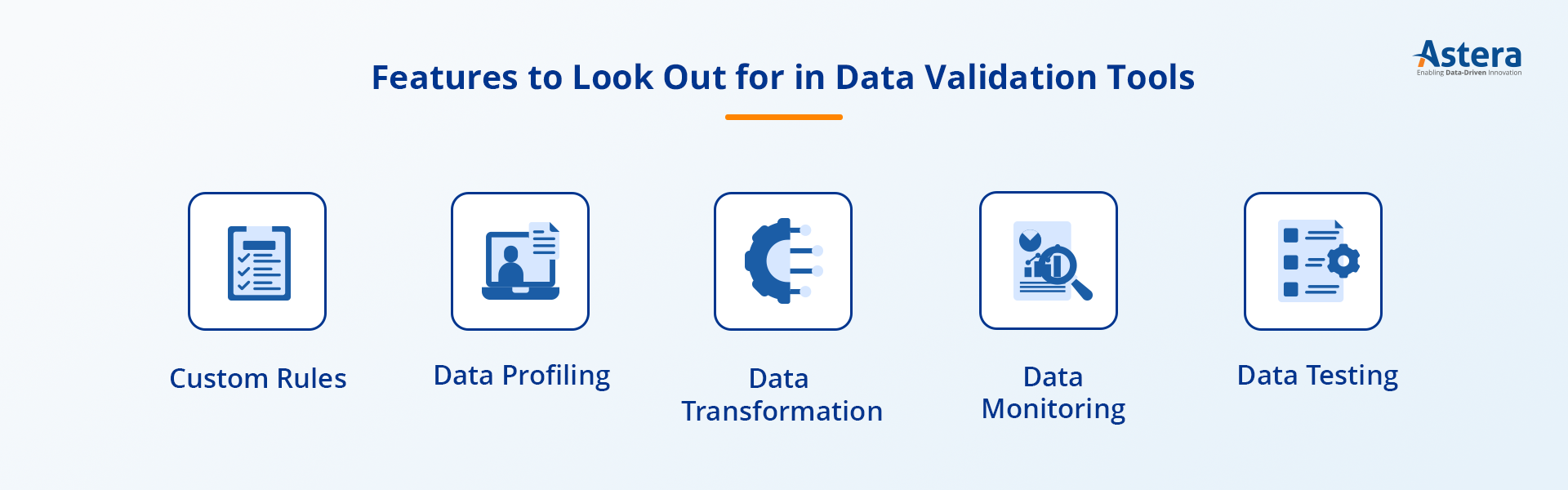

Features to Look Out for in Data Validation Tools

- Custom Rules

The ability to create specific rules, expressions, and conditions based on business requirements is crucial. A data validation tool should be able to tailor, customize, and modify the criteria based on evolving needs to ensure adaptability.

- Data Profiling

Data profiling enables an organization to analyze the current sources of its data and understand the structure, quality, and relationships between attributes. This overview of data highlights problems within the dataset, such as inaccuracies, inconsistencies, and irregularities. Therefore, it is essential for a data validation tool to provide an assessment of the data quality in real time.
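
A basic profile can be sketched in generic Python as a per-column summary of missing values, distinct values, and observed types. The column names and the choice to treat empty strings as missing are assumptions made for this example.

```python
from collections import Counter

def profile(rows):
    """Summarize each column: null count, distinct count, and observed value types."""
    columns = {key for row in rows for key in row}
    report = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v not in (None, "")]
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
            "types": dict(Counter(type(v).__name__ for v in non_null)),
        }
    return report

# Hypothetical sample data with a missing email and inconsistent age types.
rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": "41"},
    {"id": 3, "email": "a@example.com", "age": None},
]
report = profile(rows)
```

Here the `age` column shows both `int` and `str` values, the kind of inconsistency a profile is meant to surface before validation rules are written.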

- Data Transformation

Data is often in raw form and needs to be transformed into a usable format. A data validation tool should have features that include cleaning the dataset to account for missing values, converting inconsistent data into a standard format, joining/merging different datasets to provide a complete view, and, finally, enriching the data by adding new variables from external sources.

- Data Monitoring

Another vital feature of a data validation tool is the ability to monitor and track data over time and alert users to errors, discrepancies, or anomalies. Timely alerts about changes in the data help users respond to and resolve mistakes promptly, preserving the overall quality of the data.
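
One simple form of monitoring is to compute a quality metric for each incoming batch and raise an alert when it crosses a threshold. The sketch below assumes the metric is the share of missing values in a column and that 10% is the acceptable limit; both are illustrative choices.

```python
def null_rate(rows, column):
    """Return the fraction of rows where `column` is missing or empty."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(column) in (None, ""))
    return missing / len(rows)

def check_batch(rows, column, max_null_rate=0.1):
    """Return an alert message if the null rate exceeds the threshold, else None."""
    rate = null_rate(rows, column)
    if rate > max_null_rate:
        return f"ALERT: {column} null rate {rate:.0%} exceeds {max_null_rate:.0%}"
    return None

# Hypothetical daily batches.
batches = {
    "monday": [{"email": "a@example.com"}, {"email": "b@example.com"}],
    "tuesday": [{"email": None}, {"email": "c@example.com"}],
}
alerts = {day: check_batch(rows, "email") for day, rows in batches.items()}
```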

- Data Testing

Data testing, or data validation, verifies that the data meets predefined conditions and constraints so that it aligns with business objectives. By applying various data quality checks, the data is filtered and refined for consumption. This feature ensures that the data is healthy, trustworthy, compliant, and ready to be used for analysis.
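
Two of the most common checks, uniqueness and format validation, can be sketched as follows. The email pattern is deliberately simple and is an assumption for illustration, not a complete email validator.

```python
import re

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # simplified pattern

def check_unique(rows, column):
    """Return the set of values that appear more than once in `column`."""
    seen, duplicates = set(), set()
    for row in rows:
        value = row[column]
        if value in seen:
            duplicates.add(value)
        else:
            seen.add(value)
    return duplicates

def check_format(rows, column, pattern):
    """Return the rows whose `column` value does not match `pattern`."""
    return [row for row in rows if not pattern.match(str(row[column]))]

# Hypothetical sample data with a repeated id and a malformed email.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b.example.com"},
    {"id": 2, "email": "c@example.com"},
]
duplicate_ids = check_unique(rows, "id")
bad_emails = check_format(rows, "email", EMAIL_PATTERN)
```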

Must-Have Features

While selecting a data validation tool, it is essential to look out for these key features:

- Real-time health checks: The ability to track and monitor the data in real-time enables users to identify and resolve data quality issues as they arise. This offers a complete view of the data’s health, highlighting opportunities for cleansing, transforming, standardizing, and validating the data.

- Interactive Data Grid: The ability to preview, analyze, and interact with data offers the flexibility to modify it as needed. Users should be able to make the necessary changes within the grid to ensure data accuracy and consistency.

- Automation: A data validation tool should have a built-in scheduler or trigger so that whenever a file is dropped in a monitored location, such as a mailbox, the data is automatically checked against the predefined rules without manual intervention.
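
The automation idea can be sketched as a function that validates any file it has not seen before in a watched folder. The folder, the CSV layout, and the rule that `amount` must not be empty are all assumptions; a real tool would supply its own triggers and rules.

```python
import csv
from pathlib import Path

def validate_file(path):
    """Return (line number, reason) pairs for rows that break the assumed rule."""
    errors = []
    with open(path, newline="") as handle:
        # Data rows start on line 2 because line 1 is the header.
        for line_no, row in enumerate(csv.DictReader(handle), start=2):
            if not (row.get("amount") or "").strip():
                errors.append((line_no, "missing amount"))
    return errors

def process_new_files(inbox: Path, seen: set):
    """Validate every CSV in `inbox` that has not been processed yet."""
    results = {}
    for path in sorted(inbox.glob("*.csv")):
        if path.name not in seen:
            results[path.name] = validate_file(path)
            seen.add(path.name)
    return results
```

Calling `process_new_files` from a scheduled job, or in a loop with a short sleep between calls, approximates the "run on file drop" behavior described above.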

Criteria for Selecting the Right Data Validation Tool

Several factors influence the choice of a data validation tool. It is important to ensure that the tool’s features and capabilities are aligned with the organization’s requirements.

The following factors stand out:

- Data Requirements: A data validation tool should be capable of dealing with diverse data structures, formats, and sources. An organization may be dealing with structured, semi-structured, and unstructured data. Hence the data validation tool should be flexible enough to accommodate the unique demands of the various data types while maintaining quality.

- Scalability: As data volume continues to grow, an organization should choose a solution that can handle vast amounts of data efficiently. The tool should, therefore, exhibit scalability and apply data quality checks on large datasets without compromising the speed and accuracy of data processing.

- User-friendly Interface: Setting up custom data validation rules and checks is complex for users with a non-technical background, which makes a simple, easy-to-use tool essential. An intuitive approach to validation, such as a drag-and-drop interface and point-and-click navigation, shortens the learning curve, reduces errors, and streamlines the validation process.

- Ease of Integration: Finally, data validation tools should integrate easily with existing systems and workflows. Compatibility and connectivity with various data types and sources within an organization, such as databases, cloud systems, and APIs, are crucial to integrating the data effortlessly.

Benefits of Using Data Validation Tools

Without proper data validation mechanisms, enterprises might run into issues such as skewed analysis, flawed insights, and delayed or inaccurate decision-making. A data validation tool enhances an organization’s data management efforts for several reasons:

- Improved Data Accuracy

Data validation solutions prevent the spread of erroneous data throughout an organization’s systems by detecting mistakes early in the data entry or import process. This allows for well-informed decisions and accurate analysis.

- Resource Efficiency

Manual data validation takes time and is prone to human errors. Data validation software automates the process, quickly discovering anomalies and irregularities without requiring extensive human interaction. This automation saves time, allowing teams to focus on more strategic responsibilities.

- Regulatory Adherence

Maintaining correct data is not only desirable but necessary for businesses governed by strict norms and standards. Data validation solutions help organizations meet compliance obligations by helping ensure the correctness and integrity of their data. Compliance, in turn, helps avoid penalties and instills confidence in stakeholders who rely on accurate data for audits and reporting.

Final Words

Validation is essential for ensuring the integrity and trustworthiness of data throughout its lifecycle. As new data sources emerge, it is crucial to apply business-specific data validation rules and conditions to ensure that incoming data is in the desired format.

Carefully analyzing the factors discussed above will help you choose the right data validation tool for your data requirements. Investing in data validation tools, such as Astera, can help your business avoid costly errors, ensure operational efficiency, and gain a competitive edge.

Want to convert raw data into a usable format? Download Astera’s 14-day free trial today!